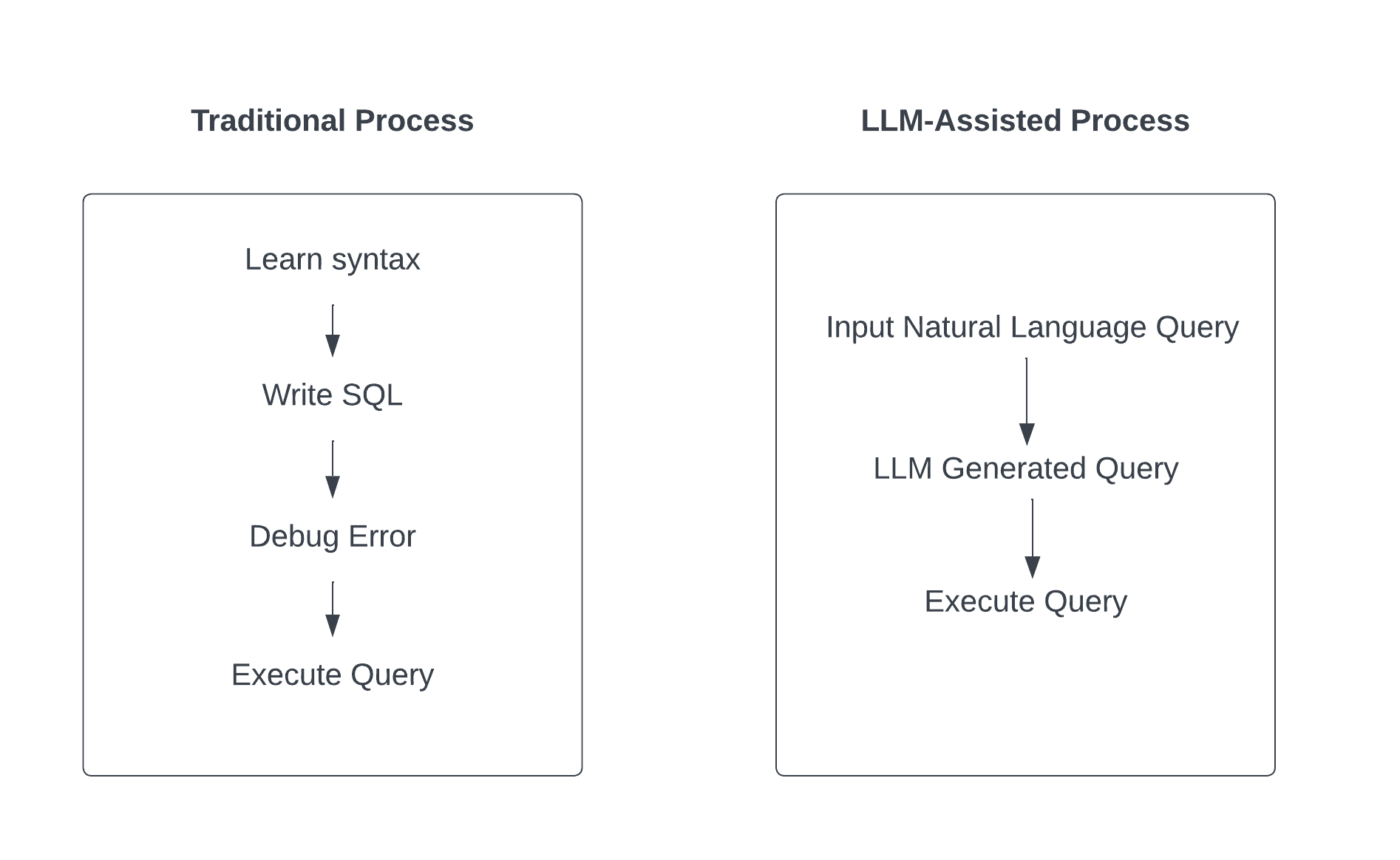

In today’s data-driven world, the ability to efficiently interact with databases is crucial for businesses of all sizes. Traditional methods of writing SQL queries can be time-consuming and require a deep understanding of database structures. However, advancements in artificial intelligence (AI) are revolutionizing this process, making it easier and more intuitive than ever before.

Imagine having a super-smart assistant that can talk to databases in their own language, making it easier for us to get the information we need.

We’ll explore how AI-powered assistants can streamline the process of SQL query generation, empowering users to extract valuable insights from their data with minimal effort. We will guide you through the process of selecting the right database, developing the schema, and utilizing natural language to load data and query databases.

We’ll explore how AI-powered assistants can streamline the process of SQL query generation, empowering users to extract valuable insights from their data with minimal effort. We will guide you through the process of selecting the right database, developing the schema, and utilizing natural language to load data and query databases.

Choose the SQL database, create the schema, and load the data.

Choosing the Right Database:

- Picture PostgreSQL, MySQL, and SQLAlchemy as different types of toolboxes for your data.

- We chose MySQL for its ease of use, speed, and compatibility with various project sizes.

- PostgreSQL is actually the crowd favourite for its robust features, but for this guide, we’re keeping it simple with MySQL.

SQL and Its Role in Data Management:

- SQL stands for Structured Query Language, you can instruct them on what data to store, locate, or modify.

- It is essential because most of the app uses a database, and SQL is the go-to language to manage all that data.

Flow of NLP to generate the SQL Query.

Now comes the exciting part: leveraging natural language processing (NLP) techniques to generate SQL queries. We’ll delve more into the architecture of AI agents, their capabilities, and real-world applications.

Agents & SQL Agent:

What is an AI Agent?

An AI agent is a computer program that simulates some aspects of human intelligence to perform tasks. It can make decisions, interact with its environment, or solve problems without continuous human guidance.

- Capabilities: Decision-making: AI agents can evaluate situations and make choices based on the data they have or the rules programmed into them.

- Problem-solving: They are capable of navigating through complex scenarios to achieve specific goals or find solutions to problems.

- Learning: Some AI agents have the ability to learn from data or past experiences, improving their performance over time. We often refer to this as machine learning.

Types of AI agents:

- Simple reflex agents: React to the current situation or environment based on pre-defined rules without considering the past or future.

- Model-based reflex agents: Take into account the current state of the world and how it changes in response to actions, allowing for a more informed decision-making process.

- Goal-based agents: Operate by considering future actions and their outcomes to achieve specific goals.

- Utility-based agents: Evaluate the success of their actions based on a utility function, aiming to maximize their satisfaction or benefit.

- Learning agents: Improve their performance and adapt to new situations by learning from their environment and past actions.

Applications:

- Virtual assistants: how Siri or Alexa perform tasks or services for an individual.

- Autonomous vehicles: cars or drones that navigate and operate without human intervention.

- Recommendation systems: how Netflix or Amazon suggest products or movies based on your preferences.

- Healthcare: AI agents can assist in diagnosing diseases, predicting patient outcomes, or personalizing treatment plans.

Benefits:

- Efficiency: In many cases, they can automate and perform tasks faster and more accurately than humans.

- Availability: AI agents are available 24/7, providing consistent service without the need for breaks or sleep.

- Personalization: can customize experiences, recommendations, and interactions based on individual preferences.

Challenges:

- Ethical and privacy concerns: Carefully consider how AI agents use and share data.

- Dependence: Over-reliance on AI agents could impact human skills and employment.

- Complexity in development and maintenance: Creating and updating AI agents requires significant expertise and resources.

Example Use Case:

We will create a sales order schema for our project:

A well-designed schema lays the foundation for efficient data storage and retrieval. We’ll walk through the creation of a sales order schema, detailing the tables, relationships, and data types involved.

Schema:

A schema in a relational database functions as a blueprint, defining the structure and organization of your data. It includes details about tables, relationships, and data types, setting the stage for storing and retrieving data efficiently.

The SalesOrder schema is crafted to capture and reflect the intricacies of sales transactions. It’s designed to store everything from customer information and inventory status to detailed sales orders and supplier data.

The schema encompasses seven pivotal tables:

- Customer: Keeps track of customer details, purchase history, and contact information.

- Employee: Record information about the staff, including their roles, contact details, and salaries.

- InventoryLog: Monitors inventory changes, providing insights into stock levels and movement.

- LineItem: Detail each item in a sales order, including price and quantity.

- Product: The product catalog provides information about products, including their descriptions, prices, and stock quantities.

- SalesOrder: Central to the schema, this table records the sales transactions, including dates, statuses, and payment details.

- Supplier: Contains data on the vendors supplying the products, essential for managing the supply chain.

Schema Design:

1 CREATE DATABASE SalesOrderSchema;

2

3 USE SalesOrderSchema;

4

5 CREATE TABLE Customer (

6CustomerID INT AUTO_INCREMENT PRIMARY KEY,

7FirstName VARCHAR(100),

8LastName VARCHAR(100),

9Email VARCHAR(255),

10 Phone VARCHAR(20),

11 BillingAddress TEXT,

12 ShippingAddress TEXT,

13 CustomerSince DATE,

14 IsActive BOOLEAN

15 );

16

17 CREATE TABLE SalesOrder (

18 SalesOrderID INT AUTO_INCREMENT PRIMARY KEY,

19 CustomerID INT,

20 OrderDate DATE,

21 RequiredDate DATE,

22 ShippedDate DATE,

23 Status VARCHAR(50),

24 Comments TEXT,

25 PaymentMethod VARCHAR(50),

26 IsPaid BOOLEAN,

27 FOREIGN KEY (CustomerID) REFERENCES Customer(CustomerID)

28 );

29

30 CREATE TABLE Product (

31 ProductID INT AUTO_INCREMENT PRIMARY KEY,

32 ProductName VARCHAR(255),

33 Description TEXT,

34 UnitPrice DECIMAL(10, 2),

35 StockQuantity INT,

36 ReorderLevel INT,

37 Discontinued BOOLEAN

38 );

39

40 CREATE TABLE LineItem (

41 LineItemID INT AUTO_INCREMENT PRIMARY KEY,

42 SalesOrderID INT,

43 ProductID INT,

44 Quantity INT,

45 UnitPrice DECIMAL(10, 2),

46 Discount DECIMAL(10, 2),

47 TotalPrice DECIMAL(10, 2),

48 FOREIGN KEY (SalesOrderID) REFERENCES SalesOrder(SalesOrderID),

49 FOREIGN KEY (ProductID) REFERENCES Product(ProductID)

50 );

51

52 CREATE TABLE Employee (

53 EmployeeID INT AUTO_INCREMENT PRIMARY KEY,

54 FirstName VARCHAR(100),

55 LastName VARCHAR(100),

56 Email VARCHAR(255),

57 Phone VARCHAR(20),

58 HireDate DATE,

59 Position VARCHAR(100),

60 Salary DECIMAL(10, 2)

61 );

62

63 CREATE TABLE Supplier (

64 SupplierID INT AUTO_INCREMENT PRIMARY KEY,

65 CompanyName VARCHAR(255),

66 ContactName VARCHAR(100),

67 ContactTitle VARCHAR(50),

68 Address TEXT,

69 Phone VARCHAR(20),

70 Email VARCHAR(255)

71 );

72

73 CREATE TABLE InventoryLog (

74 LogID INT AUTO_INCREMENT PRIMARY KEY,

75 ProductID INT,

76 ChangeDate DATE,

77 QuantityChange INT,

78 Notes TEXT,

79 FOREIGN KEY (ProductID) REFERENCES Product(ProductID)

80 );

Load the data:

Once the schema is in place, we’ll explore methods for generating and loading data into the database. Using tools like Faker and Python, we’ll demonstrate how to populate tables with realistic data.

Install Faker via pip.

pip3 install faker

1 #Employee Table

2 import mysql.connector

3 from faker import Faker

4

5 # Initialize Faker

6 fake = Faker()

7

8 # Connect to MySQL

9 conn = mysql.connector.connect(

10 host="localhost",

11 user="root",

12 password="root",

13 database="SalesOrderSchema"

14 )

15 cursor = conn.cursor()

16

17 # Generate and insert 1000 employee records

18 for _ in range(1000):

19 first_name = fake.first_name()

20 last_name = fake.last_name()

21 email = fake.email()

22 phone = fake.phone_number()

23 if len(phone) > 20: # Truncate phone number if necessary

24 phone = phone[:20]

25 hire_date = fake.date_between(start_date='-5y', end_date='today')

26 position = fake.job()

27 salary = round(fake.random_number(digits=5), 2) # Generate a 5 digit salary

28

29 # Insert employee data

30 cursor.executemany("""

31 INSERT INTO Employee (FirstName, LastName, Email, Phone, HireDate, Position, Salary)

32 VALUES (%s, %s, %s, %s, %s, %s, %s)

33 """, (first_name, last_name, email, phone, hire_date, position, salary))

34

35

36 # Generate and insert data into the Product table

37 # Generate 1000 product records

38 product_name = fake.word().capitalize() + " " + fake.word().capitalize()

39 description = fake.sentence(nb_words=10)

40 unit_price = round(random.uniform(10, 500), 2) # Random price between $10 and $500

41 stock_quantity = random.randint(10, 1000) # Random stock quantity between 10 and 1000

42 reorder_level = random.randint(5, 50) # Random reorder level between 5 and 50

43 discontinued = random.choice([0, 1]) # Randomly choose between 0 (false) and 1 (true)

44 # Insert product data

46 cursor.executemany("""

47 INSERT INTO Product (ProductName, Description, UnitPrice, StockQuantity, ReorderLevel, Discontinued)

48 VALUES (%s, %s, %s, %s, %s, %s)

49 """, (product_name, description, unit_price, stock_quantity, reorder_level, discontinued))

50

51

52 # Insert data into SalesOrder

53 # Let's say we want to generate 1000 sales orders

54 customer_id = random.choice(customer_ids)

55 order_date = fake.date_between(start_date='-2y', end_date='today')

56 required_date = order_date + timedelta(days=random.randint(1, 30))

57 shipped_date = order_date + timedelta(days=random.randint(1, 30)) if random.choice([True, False]) else None

58 status = random.choice(['Pending', 'Completed', 'Shipped'])

59 is_paid = random.choice([True, False])

60

61 cursor.executemany("""

62 INSERT INTO SalesOrder (CustomerID, OrderDate, RequiredDate, ShippedDate, Status, IsPaid)

63 VALUES (%s, %s, %s, %s, %s, %s)

64 """, (customer_id, order_date, required_date, shipped_date, status, is_paid))

65

66

67 # Generate and insert data into the Supplier table

68 # Assuming you want to insert 1000 records

69 company_name = fake.company()

70 contact_name = fake.name()

71 contact_title = fake.job()

72 # Ensure ContactTitle does not exceed the column's max length, e.g., VARCHAR(50)

73 contact_title = contact_title[:50] if len(contact_title) > 50 else contact_title

74 address = fake.address().replace('\n', ', ') # Replace newlines with commas for address

75 phone = fake.phone_number()

76 # Ensure phone does not exceed the column's max length, e.g., VARCHAR(20)

77 phone = phone[:20] if len(phone) > 20 else phone

78 email = fake.email()

79

80 # Insert supplier data

81 cursor.executemany("""

82 INSERT INTO Supplier (CompanyName, ContactName, ContactTitle, Address, Phone, Email)

83 VALUES (%s, %s, %s, %s, %s, %s)

84 """, (company_name, contact_name, contact_title, address, phone, email))

85

86 # Generate and insert data

87 # Let's say we want to generate 100 records

88 first_name = fake.first_name()

89 last_name = fake.last_name()

90 email = fake.email()

91 phone = fake.phone_number()

92 if len(phone) > 20: # Assuming the 'Phone' column is VARCHAR(20)

93 phone = phone[:20] # Truncate phone number to fit into the column

94 address = fake.address()

95 customer_since = fake.date_between(start_date='-5y', end_date='today')

96 is_active = fake.boolean()

97

98 # Insert customer data

99 cursor.executemany ("""

100 INSERT INTO Customer (FirstName, LastName, Email, Phone, BillingAddress, ShippingAddress, CustomerSince, IsActive)

101 VALUES (%s, %s, %s, %s, %s, %s, %s, %s)

102 """, (first_name, last_name, email, phone, address, address, customer_since, is_active))

103

104

105 # Insert data into LineItem

106 # Assuming multiple line items per order

107 sales_order_id = random.choice(sales_order_ids)

108 product_id = random.choice(product_ids)

109 quantity = random.randint(1, 10)

110 unit_price = round(random.uniform(10, 100), 2) # Assuming you have this info or fetch it from Product table

111 total_price = quantity * unit_price

112

113 cursor.executemany("""

114 INSERT INTO LineItem (SalesOrderID, ProductID, Quantity, UnitPrice, TotalPrice)

115 VALUES (%s, %s, %s, %s, %s)

116 """, (sales_order_id, product_id, quantity, unit_price, total_price))

117

118

119 # Assuming you want to insert 1000 inventory log records

120 product_id = random.choice(product_ids) # Randomly select a product ID

121 change_date = fake.date_between(start_date="-1y", end_date="today")

122 quantity_change = random.randint(-100, 100) # Assuming inventory can increase or decrease

123 notes = "Inventory " + ("increased" if quantity_change > 0 else "decreased")

125

126 # Insert inventory log data

127 cursor.executemany("""

128 INSERT INTO InventoryLog (ProductID, ChangeDate, QuantityChange, Notes)

129 VALUES (%s, %s, %s, %s)

130 """, (product_id, change_date, quantity_change, notes))

131

132 # Commit the transaction

133 conn.commit()

134

135 # Close the cursor and connection

136 cursor.close()

137 conn.close()

138

139 print("1000 employee records inserted successfully.")

140 ---

Model_Code:

pip3 install streamlit langchain

1 import os

2 import streamlit as st

3 from langchain_openai import ChatOpenAI

4 from langchain_community.utilities import SQLDatabase

5 from langchain_community.agent_toolkits import create_sql_agent

6

7 # Set your OpenAI API key here

8 os.environ["OPENAI_API_KEY"] = "sk-XXXX" 9

10 # Directly using database connection details

11 host = "localhost"

12 user = "root"

13 password = "root"

14 database = "SalesOrderSchema"

15

16 # Setup database connection

17 db_uri = f"mysql+mysqlconnector://{user}:{password}@{host}/{database}"

18 db = SQLDatabase.from_uri(db_uri)

19 llm = ChatOpenAI(model="gpt-4", temperature=0)

20 agent_executor = create_sql_agent(llm, db=db, agent_type="openai-tools", verbose=True)

21

22 # Streamlit app layout

23 st.title('SQL Chatbot')

24

25 # User input

26 user_query = st.text_area("Enter your SQL-related query:", "List Top 10 Employees by Salary?")

27

28 if st.button('Submit'):

29 #try:

30 # Processing user input

31 #response = agent_executor.invoke(user_query)

32 #response = agent_executor.invoke({"query": user_query})

33 #if st.button('Submit'):

34 try:

35 # Processing user input

36 response = agent_executor.invoke({

37 "agent_scratchpad": "", # Assuming this needs to be an empty string if not used

38 "input": user_query # Changed from "query" to "input"

39 })

40 st.write("Response:")

41 st.json(response) # Use st.json to pretty print the response if it's a JSON

42 except Exception as e:

43 st.error(f"An error occurred: {e}")

45 #st.write("Response:")

46 #st.write(response)

47 #except Exception as e:

48 #st.error(f"An error occurred: {e}")

49

Code Overview:

- Initialize the LLM: Using GPT-4, you set up an instance ready to process inputs.

- Create a SQL Query Chain: This chain converts natural language questions into SQL queries by combining the LLM with your database.

- Define a System Message: This provides context about your database schema to help generate accurate SQL queries.

- Generate SQL Query: Converts a user’s natural language input (“Show me the total sales for each product last year”) into an executable SQL query.

- Execute SQL Query: Runs the generated query against the database to fetch the required data.

Explaining Code:

From 1 to 4, import the langchain and streamlit modules. The eighth line imports the Stream Lit interface. lines 16 to 20, connect the database with the created mock data. From the 28th line, attempt to capture the configuration for open AI responses.

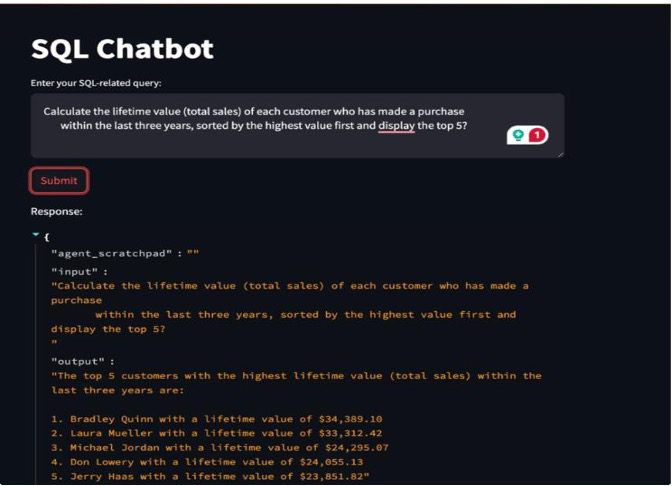

Stream–lit app Interface:

- Sets up a simple web interface title as ‘SQL Chatbot. The system offers a text area where users can input their SQL-related queries. It also features a submit button that allows users to execute their queries. Processing and displaying responses

- When the user clicks the submit button, the app attempts to process the query using the SQL agent. It formats and displays the SQL agent’s response as JSON in the Streamlit interface.

- If an error occurs during the process, an error message will be displayed. Error handling: The script includes a try-except block to catch and display errors that may occur during the query processing or response generation phase.

How to run the Stream-lit Python script:

streamlit run app.py

10 Replace app.py with the name of your Streamlit script file if it's different.

11

12 Access the web interface: After running the command, Streamlit will

13 start the server and provide you with a local URL, usually something

14 like http://localhost:8501. Open this URL in your web browser to view

15 your Streamlit application.

16

17 These steps will allow you to run and interact with any Streamlit script,

18 turning your Python scripts into interactive web applications easily.

Query1:

1 Calculate the lifetime value (total sales) of each customer who has made a

2 purchase within the last three years, sorted by the highest value first and 3 display the top 5?

Using Prompt Engineering:

We’ll discuss the concept of prompt engineering, which involves crafting input prompts to guide AI models in generating accurate SQL queries. We will also provide examples and best practices to illustrate this approach.

How It Works:

- Examples as Guides: By including examples of natural language queries alongside their correct SQL translations in the prompt, you essentially provide the model with a template to follow. This method uses the principle of few-shot learning, where the model uses the provided examples to understand and generalize the task at hand.

- Contextual Information: Adding information about the database schema, such as table names and relationships, can help the model generate more accurate SQL queries. This contextual backdrop aids the model in mapping natural language terms to their corresponding entities in the database.

- Task Description: Starting the prompt with a clear task description (e.g., “Translate the following natural language queries into SQL”) signals the model’s expected task, priming it for better performance on the specified task.

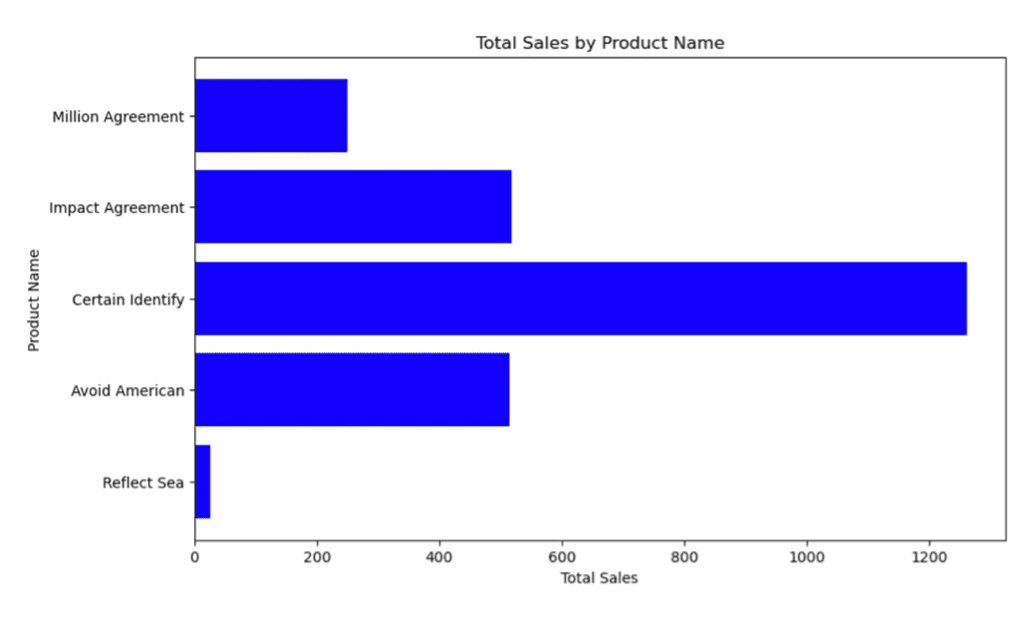

LLM produces the following output:

SELECT P.ProductName, SUM(L.TotalPrice) AS TotalSales 4 FROM Product P JOIN LineItem L ON P.ProductID = L.ProductID JOIN SalesOrder S ON L.SalesOrderID = S.SalesOrderID WHERE YEAR(S.OrderDate) = YEAR(CURDATE()) – 1 GROUP BY P.ProductName;

Example Query Inputs and Outputs:

1 You are a MySQL expert. Given an input question, create a syntactically

2 correct MySQL query to run. Unless otherwise specified, do not return more

3 than 10 rows.

4

5 Here is the relevant table info:

6 - Customer (CustomerID INT, FirstName VARCHAR(100), LastName VARCHAR(100), Email VARCHAR(255), Phone VARCHAR(20), BillingAddress TEXT, ShippingAddress TEXT, Cust 7.Employee (EmployeeID INT, FirstName VARCHAR(100), LastName VARCHAR(100), Email VARCHAR(255), Phone VARCHAR(20), HireDate DATE, Position VARCHAR(100), Salary DE 8.InventoryLog (LogID INT, ProductID INT, ChangeDate DATE, QuantityChange INT, Notes TEXT)

9 - LineItem (LineItemID INT, SalesOrderID INT, ProductID INT, Quantity INT, UnitPrice DECIMAL, Discount DECIMAL, TotalPrice DECIMAL)

10 - Product (ProductID INT, ProductName VARCHAR(255), Description TEXT, UnitPrice DECIMAL, StockQuantity INT, ReorderLevel INT, Discontinued TINYINT)

11 - SalesOrder (SalesOrderID INT, CustomerID INT, OrderDate DATE, RequiredDate DATE, ShippedDate DATE, Status VARCHAR(50), Comments TEXT, PaymentMethod VARCHAR(50)

12 - Supplier (SupplierID INT, CompanyName VARCHAR(255), ContactName VARCHAR(100), ContactTitle VARCHAR(50), Address TEXT, Phone VARCHAR(20), Email VARCHAR(255))

13

14 Below are a number of examples of questions and their corresponding SQL queries.

15

16 User input: List all customers.

17 SQL query: SELECT * FROM Customer;

18

19 User input: Find all orders placed by customer with ID 1.

20 SQL query: SELECT * FROM SalesOrder WHERE CustomerID = 1;

21

22 User input: List all products currently in stock.

23 SQL query: SELECT * FROM Product WHERE StockQuantity > 0;

24

25 User input: Find the supplier for product with ID 10.

26 SQL query: SELECT s.CompanyName FROM Supplier s JOIN Product p ON s.SupplierID = p.SupplierID WHERE p.ProductID = 10;

27

28 User input: List the sales orders that have not been shipped yet.

29 SQL query: SELECT * FROM SalesOrder WHERE Status = 'Pending';

30

31 User input: How many employees work in the sales department?

32 SQL query: SELECT COUNT(*) FROM Employee WHERE Position LIKE '%sales%';

33

34 User input: List the top 5 most sold products.

35 SQL query: SELECT ProductID, SUM(Quantity) AS TotalQuantity FROM LineItem GROUP BY ProductID ORDER BY TotalQuantity DESC LIMIT 5;

36

37 User input: Find the total sales amount for orders completed this year.

38 SQL query: SELECT SUM(TotalPrice) FROM SalesOrder WHERE YEAR(OrderDate) = YEAR(CURDATE()) AND Status = 'Completed';

39

40 User input: List all suppliers from 'New York'.

41 SQL query: SELECT * FROM Supplier WHERE Address LIKE '%New York%';

42

43 User input: How many products are low on stock (below reorder level)?

44 SQL query: SELECT COUNT(*) FROM Product WHERE StockQuantity < ReorderLevel;

Key Points to Remember:

- Understand the Schema: Familiarize yourself with the database structure, knowing the tables, their relationships, and the data types of each column.

- Clear User Prompts: Encourage users to provide clear and specific details in their queries. For instance, instead of asking, “Give me sales numbers,” they should ask, “What are the total sales for product X in the last quarter?”

- Crafting System Prompts: Design system prompts that instruct the LLM on how to interpret user questions and structure SQL queries. This can include specifying the SQL dialect, the output format, and any constraints like date ranges or specific fields.

- Handling Multiple Tables: When dealing with complex schemas, include instructions on joining tables and managing relationships between them. For example, if a user wants to know about sales, you might need to join the Sales, Customers, and Products tables.

- Incorporate Examples: Include a few examples of natural language queries transformed into SQL queries. This provides the LLM with a pattern to follow.

- Test and Iterate: Test your prompts with a variety of queries to ensure they produce the correct SQL statements. Be prepared to refine your prompts based on these tests.

1 User Prompt: "How much did each product contribute to sales last year?"

2

3 System Prompt: "To answer, join the Product and SalesOrder tables, filter 4 orders from last year, and sum the sales for each product."

5 Example SQL: "SELECT Product.ProductName, SUM(SalesOrder.TotalSales)

6 FROM Product JOIN SalesOrder ON Product.ProductID = SalesOrder.ProductID 7 WHERE YEAR(SalesOrder.OrderDate) = YEAR(CURDATE()) - 1 GROUP BY Product. 8 ProductName;"

9 User Prompt: "List the top 5 customers by sales volume."

10

11 System Prompt: "Identify customers with the highest sales volume by

12 joining Customer and SalesOrder tables, grouping by customer, and

13 ordering by the sum of sales."

14 Example SQL: "SELECT Customer.FirstName, Customer.LastName,

15 SUM(SalesOrder.TotalSales) AS TotalSales FROM Customer JOIN SalesOrder

16 ON Customer.CustomerID = SalesOrder.CustomerID GROUP BY Customer.CustomerID

17 ORDER BY TotalSales DESC LIMIT 5;"

18 User Prompt: "What's the average sale per product category last month?"

SQL Query Validation:

Ensuring the correctness and efficiency of generated SQL queries is crucial. We’ll explore techniques for validating queries and handling common challenges, such as non-descriptive table and field names.

Create a Translation Mapping:

Develop a comprehensive mapping that translates technical identifiers (table names, field names) into more understandable or English terms. This helps bridge the gap between natural language queries and the actual database schema.

- Incorporate Mapping into LLM Input: Integrate this mapping directly into the system prompt or use it to pre-process user queries, making it easier for the LLM to understand and generate the correct SQL queries.

- Fine-tune the LLM with Custom Data: Consider fine-tuning the LLM on examples that include both the technical identifiers and their translations to improve its ability to handle such cases directly.

- Use Annotated Examples: Provide context for the LLM by including examples in prompts or training data that use technical identifiers in natural language questions and their corresponding SQL queries.

1 # Example mapping of technical identifiers to understandable terms

2 mapping = { "kunnr": "customer", "lifnr": "vendor"} # Add more mappings as needed

7

8 def translate_query(user_query, mapping):

9 for technical_term, common_term in mapping.items():

10 user_query = user_query.replace(common_term, technical_term)

return user_query

13 # Example usage

14 user_query = "Show sales for vendor last year"

15 translated_query = translate_query(user_query, mapping) # Now translated_query can be passed to the LLM for SQL generation

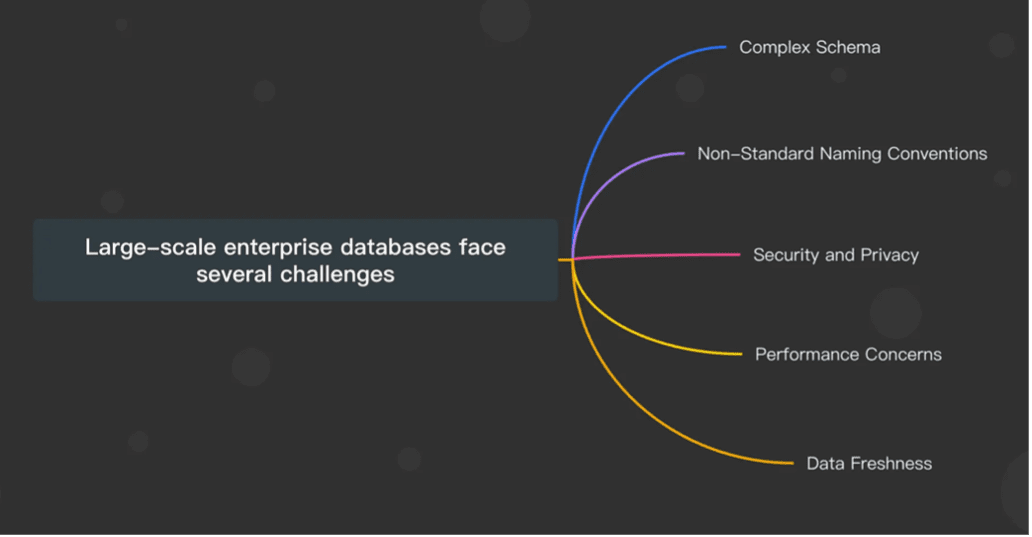

Solving a couple of problems on large-scale enterprise databases which faces several challenges:

Conclusion:

By harnessing the power of AI, organizations can streamline the data interaction process, enabling faster decision-making and unlocking new insights. Whether you’re a data analyst, developer, or business user, understanding AI-driven SQL query generation is essential for staying ahead in today’s data-centric world.

Get ready to supercharge your data workflows and unleash the full potential of your databases with AI-powered SQL query generation!

Reference:

- Boosting RAG-based intelligent document assistants using entity extraction, SQL querying, and agents with Amazon Bedrock | Amazon Web Services

- GitHub – ek2020/text2sql: text2sql